9 AI-Powered Financial Scams To Look Out For In 2024

Artificial Intelligence (AI) has taken over the public lexicon, the stock market, and potentially your bank account. A survey of financial institutions conducted by BioCatch found that 56% of banking institutions around the globe have noticed an uptick in general financial crimes in the last year. While North American banks are 71% as likely to use AI to reduce financial threats, 51% of financial institutions around the world reported losses of between $5 million and $25 million to AI-generated crime in 2023. Phishing, often employing synthetic or fraudulent identities, was the cause of many of these crimes. In fact, phishing accounted for 90% of how these scams began, with 72% of financial institutions saying synthetic identities were used.

If even the banks are being fooled by these scams, you can bet that the general populace is also a target for AI-related scams. Deloitte's Center for Financial Services projects AI-generated fraud will be responsible for $40 billion in financial losses by 2027, more than three times the $12.3 billion lost in 2023. Everything from cryptocurrency to streaming music is a potential vehicle for fraud. For example, after creating hundreds of thousands of AI-generated bands and songs on a music streaming platform, as well as fake fans to stream the music, a North Carolina man generated $10 million in very real money. The scammer, Michael Smith, was arrested on federal charges this month for wire fraud and money laundering conspiracy. Here are nine more AI-powered scams to avoid in 2024.

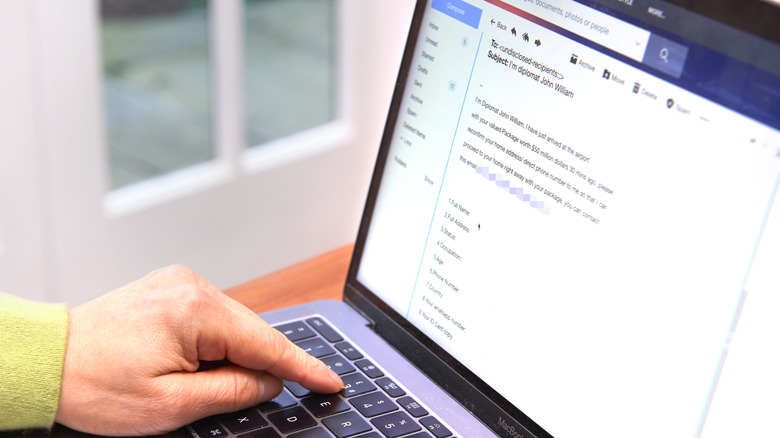

Business email scams

According to a report by the Federal Bureau of Investigation (FBI), compromised business emails cost companies and individuals more than $2.7 billion in 2022, leading to 21,832 complaints. AI has raised the stakes of business email fraud by creating opportunities to gain access to legitimate email accounts through hacking or spoofing. The FBI report recounts an incident in July 2022 in which a couple in the process of a real estate transaction in Charlotte, North Carolina, received a spoofed email from their realtor. The supposed realtor requested that $400,000 in escrow be sent to a bank account in order to purchase a home. Luckily, shortly after wiring the money, the couple realized the email wasn't legitimate and contacted the FBI's Internet Crime Complaint Center (IC3), which promptly investigated and was able to get the funds back to the couple.

Making things more difficult is publicly available software like BulletProftLink, which has templates and automated hosting services that help cyber criminals create proxy business emails and purchase the credentials and IP addresses of potential targets. This makes it difficult to trace their whereabouts, and with the assistance of blockchain technology, the software can actually host phishing sites for business emails. In 2023, compromised business emails cost the U.S. over $2.9 billion, up from $2.7 billion in 2022.

Biometric bypassing

Biometric scanning uses your unique physical characteristics as a form of security access by scanning your fingerprints, your retina, or your specific facial features as a primary form of identification. The system encrypts this mapped data, which then acts as a key to whatever information you want to access. Biometrics were once considered the height of verification but, as is typically the rule, scammers have figured out ways to evade the security. In fact, you've probably already provided them with the workaround. Whether it's on Instagram or Facebook, the odds that you have shared your face on a social media platform are pretty good, depending on your age, and that is how criminals can use your social media to scam you.

According to Statista, for Americans between 18 and 34 years old, there's an 82% chance there's a selfie floating around the web, and over half (63%) of U.S. adults aged 35 to 54 also take selfies. Scammers can rip these images from social media and can sometimes use them to trick facial recognition software. In 2023, a con artist in Sao Paulo, Brazil, used blown-up cutouts of victims glued to a stick to gain access to several mobile banking app accounts. While that was pretty low-tech, more sophisticated methods use AI and machine learning to produce deepfakes that are difficult for biometric scans to catch. If scammers gain access to your phone through, for instance, a fake business email, they can hack the recognition system itself, rendering your biometric scan useless.

Password cracking

As per a Forbes Advisor survey of 2,000 Americans, 46% of people surveyed said their passwords were cracked and stolen by scammers, with 35% thinking it happened because they had weak passwords. Social media accounted for the most hacked passwords, followed in order by email, home Wi-Fi networks, online shopping accounts, public Wi-Fi and streaming, and finally, health care. While more than a third of people surveyed thought their password strength was to blame, and another 30% believed it was due to overuse of the same password across accounts, it turns out that it could be more related to technology working against them than human error.

Home Security Heroes is an identity theft advisory platform that purchases identity theft protection technology from creators and puts it through a rigorous test phase to ensure it works up to standard. One of the tools it tested, PassGAN, can crack a seven-character password with symbols in six minutes, and a password of 18 characters (usually considered safe against password hacks) in 10 months. PassGAN was trained on real password leaks, analyzing 15,680,000 common passwords, so it guesses the way humans choose passwords and works through likely candidates in less time than older password cracking systems. Your best protection from this kind of AI tool is a longer, more complicated password of at least 10 characters that mixes symbols, numbers, and uppercase and lowercase letters. Change that password every three to six months to be on the safe side.
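
To see why length and character variety matter, here is a rough back-of-the-envelope sketch in Python. It assumes every character is picked uniformly at random from a fixed set, which is not how PassGAN works (it learns from leaked passwords) and not how most people choose passwords, so treat the numbers as an upper bound on guessing effort rather than a prediction of cracking time. The function names and the 95-character set are illustrative assumptions, not anything published by Home Security Heroes.

```python
import math

# Assumed character-set sizes for a standard US keyboard (printable ASCII).
LOWER, UPPER, DIGITS, SYMBOLS = 26, 26, 10, 33  # symbols include the space
FULL_SET = LOWER + UPPER + DIGITS + SYMBOLS      # 95 characters in total


def keyspace(length: int, alphabet_size: int) -> int:
    """Count the possible passwords of exactly `length` characters."""
    return alphabet_size ** length


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Bits of entropy for a uniformly random password."""
    return length * math.log2(alphabet_size)


if __name__ == "__main__":
    # Compare the lengths mentioned in the article.
    for n in (7, 10, 18):
        print(f"{n} chars, full set: {keyspace(n, FULL_SET):.3e} "
              f"combinations, {entropy_bits(n, FULL_SET):.1f} bits")
    # Digits only: far fewer combinations at the same length.
    print(f"18 chars, digits only: {keyspace(18, DIGITS):.3e} combinations")
```

Under these assumptions, each extra character multiplies the number of combinations by the size of the character set, which is why adding length helps more than swapping one letter for a symbol.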

Celebrity deep fake campaigns

Deep fakes can do more than just bypass your biometric security, especially if there's a famous face on the other end of the screen. Identity verification company Jumio conducted a global survey and found that an average 60% of people have encountered a deep fake in the last year, with 72% expressing concern over the possibility of being fooled by one. An average 60% thought they could detect a deep fake, and a scientific study published on ScienceDirect found that humans did reach a mean accuracy of 60%, but low scorers only recognized them 25% of the time. Even at 60% accuracy, getting tricked by a deep fake four out of 10 times is more than enough to ruin your day.

AI has opened up the possibility of creating fake celebrity ads for fraudulent charities, exclusive events, and business schemes like bogus investments in real estate or cryptocurrencies. This is possible thanks to deep fake technology that can turn three seconds of a recorded voice and flawlessly rendered facial characteristics into video, including livestreams. Investment scams are a big one, where fake news articles or ads can accompany the celebrity's image with links to sign-up forms for the investment portal. You'll pay an access fee of a few hundred dollars you'll never see again. A Dolly Parton deep fake selling CBD Keto Gummies for $10 a pop was so successful that Parton's team had to release a statement to distance her from it in 2023.

Voice cloning

According to McAfee, 53% of all adults share their voice online or on social media at least once a week. We've already told you that AI only needs to capture three seconds of your voice to create a facsimile. McAfee discovered that 77% of voice cloning scams actually work. Almost half of people surveyed (41% to 47%) said they were most likely to respond to voicemails from close friends or family members like children, spouses, or parents. Scams claiming that a loved one needed support while traveling, had lost a phone or wallet, or had been in a robbery or car accident were most likely to get responses from those surveyed, with robberies and car accidents topping the list at 47% to 48% likelihood.

This is one of the biggest financial scams seniors need to be aware of since, according to McAfee's study, parents aged 50 and over were the most likely to respond to calls for financial assistance from their children, doing so 41% of the time. An Arizona woman whose 15-year-old daughter was on a ski trip received a call from what sounded like her daughter claiming she had been kidnapped. The con artist then took over the call, demanding she pay a ransom for her daughter's safe return. Scarier still, the scammer wanted to pick the woman up. Luckily, she was able to confirm her daughter's safety beforehand. That's why you should never respond if someone calling you uses these phrases.

Personalized spam

Aside from being annoying, spam can be dangerous to your bank account. AI is able to generate the text for mass email campaigns, and thanks to training on the work of human writers, those emails are becoming ever more convincing. Things like social media make it very possible to track your movements online and craft customized messages based on your location and shopping habits. Dark web alter egos of OpenAI's ChatGPT, such as WormGPT and FraudGPT, have none of the safeguards of their namesake, making it possible to create quite convincing email campaigns with links to real-looking fake websites through data analysis and the sheer scale of their reach.

In a phishing experiment run by engineers at IBM, the engineers found that AI could create a phishing email from just five prompts in a fraction of the time it took them, and that the result was nearly as effective. The AI was able to do it in five minutes, while the engineers took 16 hours to craft spam campaigns that were more effective than what AI could generate. The experiment helped them come up with a five-prompt theory for effective spam email scams. It involves first creating a list of concerns or interests for your targets, then using knowledge of human behaviors and socialization, adding standard marketing techniques to the mix, figuring out the target audience, and finally, deciding what identity to assume in order to sound more convincing. Falling for any part of this and giving away credit card or banking info is a sure way to get gotten.

Insurance fraud

The convincing, generative powers of AI can even be used to defraud insurance companies. This is among the most pressing money-related scams to watch out for in 2024. With deep fake technology and voice cloning, scammers can generate whole social media accounts and personalities with realistic photos and even videos that may fool insurance agents. A fraudster could also claim medical injuries, using AI trained with knowledge of radiology to generate fake X-rays and scans. Emails, letters, and even medical information can be falsified and made to look as if they came directly from a physician, hospital, or laboratory. Letterhead, signatures, and logos can all be used to make documents look more convincing. Going further, these injuries could be part of a manufactured auto accident, where images of streets, vehicles of specific makes and models, and even real license plates could be generated. Really, the possibilities are endless in the wrong, capable hands.

According to The Risk Management Society (RIMS), the cost of insurance fraud always gets passed on to the consumer. On average, Americans cover $1,000 worth of fraudulent claims per individual annually, or $39,000 per family per year. That amounts to a total of $308 billion per year, and your insurance premiums are unfortunately impacted by that. We've explained why the cost of auto insurance is rising in the U.S. as well as the impact of climate change on auto insurance. This is how AI scams figure into the equation.

AI-generated ID

AI-generated ID is a problem for obvious reasons, and it can be easy and cheap to get. For $15, you can have AI generate identification, including driver's licenses and passports, on sites like OnlyFake. OnlyFake can generate identification for 26 countries around the world, and worse, the IDs are apparently convincing enough to fool the security of crypto exchanges. In a test run conducted by 404 Media, it was discovered that a realistic California driver's license could be created with all the markers of a real card, including name, expiry date, and signature. The site even created a background showing the license lying on a carpet. This ID passed the security clearance of crypto exchange OKX, and the site has apparently also had success generating IDs that have fooled major exchanges like Coinbase and Binance.

Apparently, the AI on sites like OnlyFake can even create timestamps with time and dates for photos and faked GPS locations to fool security systems that rely on this data to help them discern authenticity. Obviously, if you can create a passport and a driver license convincing enough to fool crypto exchanges, you can probably open up mobile app accounts or even register a fake business or worse. A scammer with access to your image could create an ID and open an account in your name.

Crypto scams

Speaking of crypto, AI deep fake technology is now being used to steal crypto from unsuspecting users through the promise of doubling people's crypto investments. The first step involves scammers using malware to access a YouTube channel's browser data and passwords. Their preference is for channels with between 1 million and 12.5 million subscribers, for maximum impact. Once they have control over the channel, they will quickly (as in, within seconds) change the channel name, avatar, and banner, and set the videos to private. They'll also erase any trace of the prior channel description that might tip off anyone new to the channel that something is amiss. While some videos will be left open for people viewing YouTube in their accounts, the live chat function will be removed so there's no way for anyone scammed to warn other people in the channel. Finally, a link to an AI-generated site promoting the scam will be left in place for victims.

As mentioned earlier, celebrity deep fakes can be leveraged for these sorts of scams quite effectively. This year, there were apparently dozens of fake AI-generated videos of Elon Musk touting a cryptocurrency giveaway. The videos came complete with a QR code for viewers to deposit their crypto with the promise of getting back twice as much. It's not a good idea to deposit your crypto to any random wallet or exchange advertised online. Instead, conduct proper research on how to choose the right crypto exchange.